We’ll guide you through setting up the APIs and using them together, which can streamline your data workflow and save you a lot of time.

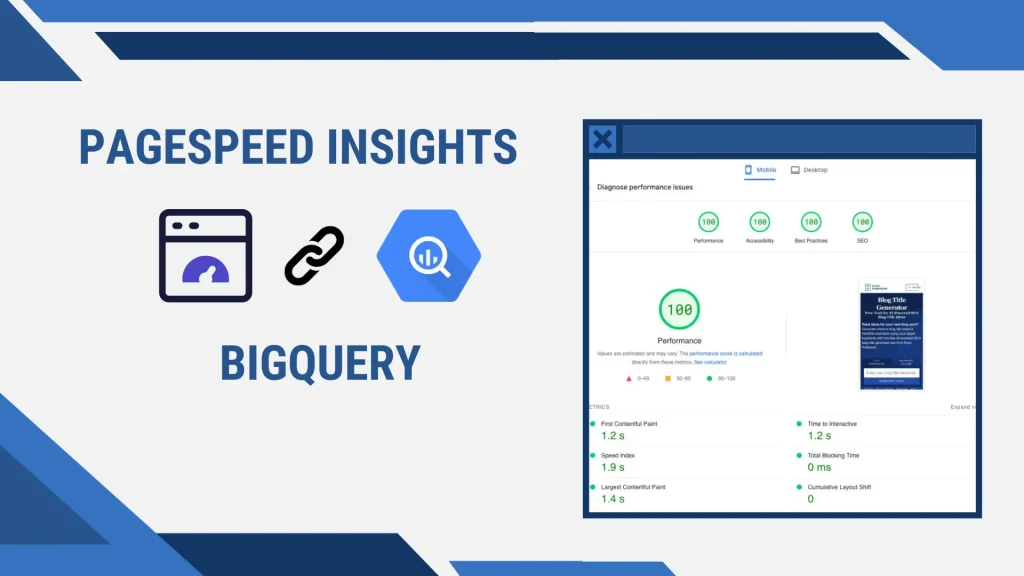

PageSpeed Insights (PSI) API

PSI is Google’s audit tool for measuring the performance of a webpage on both mobile and desktop devices. It outputs a score from 0 to 100 for each metric, along with suggestions on how to improve performance.

It also reports how long the page takes to load and shows how much each metric contributes to the overall performance score.
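To make these outputs concrete, here is a minimal sketch of where they sit in a PSI API response. The `sample_response` dictionary is a trimmed, made-up stand-in (a real response contains far more fields and other values), and `summarize` is a hypothetical helper that is not part of the project code.

```python
# Trimmed, made-up stand-in for a PSI v5 response.
sample_response = {
    "lighthouseResult": {
        "audits": {
            "largest-contentful-paint": {"score": 0.82, "numericValue": 2345.6},
            "total-blocking-time": {"score": 0.97, "numericValue": 120.0},
        },
        "categories": {
            "performance": {
                "score": 0.91,
                "auditRefs": [
                    {"id": "largest-contentful-paint", "weight": 25},
                    {"id": "total-blocking-time", "weight": 30},
                ],
            }
        },
    }
}


def summarize(response):
    """Return the overall score plus each metric's score, raw value, and weight."""
    result = response["lighthouseResult"]
    performance = result["categories"]["performance"]
    # auditRefs lists how much each audit counts toward the category score
    weights = {ref["id"]: ref["weight"] for ref in performance["auditRefs"]}
    metrics = {}
    for audit_id, audit in result["audits"].items():
        metrics[audit_id] = {
            "score": round(audit["score"] * 100),  # the API reports scores as 0..1
            "value_ms": audit["numericValue"],
            "weight": weights.get(audit_id, 0),
        }
    return {"performance": round(performance["score"] * 100), "metrics": metrics}


summary = summarize(sample_response)
print(summary["performance"])
print(summary["metrics"]["largest-contentful-paint"])
```

The API returns scores on a 0 to 1 scale, which is why the sketch multiplies by 100 to match the 0 to 100 scores shown in the PSI report.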

Like many Google tools, PSI has an API, which we can enable in the Google Cloud Console. The PSI API also works without an API key from Google Cloud, but requests would then likely fail from time to time. To prevent that, use it with an API key.

To get the API key you need to follow these steps:

- Create a project on Google Cloud Console

- Find the PageSpeed Insights API in the API library and enable it

- Create an API key under the Credentials tab by clicking the CREATE CREDENTIALS button

- Use the PSI API by sending a request to one of the following URLs:

  - For mobile device results: https://www.googleapis.com/pagespeedonline/v5/runPagespeed?url=<WEBPAGE_URL>&strategy=mobile&locale=en&key=<YOUR_PSI_API_KEY>

  - For desktop device results: https://www.googleapis.com/pagespeedonline/v5/runPagespeed?url=<WEBPAGE_URL>&strategy=desktop&locale=en&key=<YOUR_PSI_API_KEY>

Keep in mind that you need to input your own information in places of <WEBPAGE_URL> and <YOUR_PSI_API_KEY>.
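As a minimal sketch, the request URL can also be assembled in Python so that the audited URL is properly encoded. The helper name `build_psi_url` and the placeholder key are assumptions made for illustration; only the endpoint and the query parameters come from the links above.

```python
from urllib.parse import urlencode, urlparse, parse_qs

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy, api_key, locale="en"):
    """Build a PSI request URL for one device type (hypothetical helper)."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    # urlencode escapes characters such as '&' and '?' inside the audited URL
    query = urlencode({
        "url": page_url,
        "strategy": strategy,
        "locale": locale,
        "key": api_key,
    })
    return f"{PSI_ENDPOINT}?{query}"


# "YOUR_PSI_API_KEY" is a placeholder, not a working key
desktop_url = build_psi_url("https://example.com/?q=a&b=c", "desktop", "YOUR_PSI_API_KEY")
print(desktop_url)
```

Encoding matters when the audited URL has its own query string: without it, a parameter such as `b=c` would be read as a parameter of the PSI request itself.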

BigQuery (BQ) API

BQ is Google Cloud’s enterprise data warehouse. It scales with your data and lets you run complex SQL queries over large datasets quickly.

Setting up the API for BQ is a little more complicated than it was for the PSI API. You should already have a Google Cloud Console project from setting up the PSI API, so we can move straight on to the BigQuery API.

In order to get the API working, you’ll need to follow these steps:

- Go to the API library and enable the BigQuery API

- Go to Credentials and create a service account

- Create a JSON key for the service account, download the file, and copy it into your project folder as credentials.json (the name the script expects)

- Copy the email address of your service account and go to IAM & Admin > IAM

- Under the Permissions tab, click GRANT ACCESS and paste the service account email address into the New principals input field

- Assign the BigQuery Admin role to the service account

- Click the SAVE button

Now, you’re ready to start using the BigQuery API within your code.
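Before moving on, it can help to confirm that the downloaded file really is a service account key and to read back the email address used in the IAM step. The sketch below assumes the key file is named `credentials.json` and sits in the current folder; `service_account_email` is a hypothetical helper.

```python
import json
import os


def service_account_email(key_path):
    """Return the client_email stored in a service account key file."""
    with open(key_path, encoding="utf-8") as f:
        key = json.load(f)
    # service account key files carry "type": "service_account"
    if key.get("type") != "service_account":
        raise ValueError(f"{key_path} does not look like a service account key")
    return key["client_email"]


if __name__ == "__main__":
    key_file = "credentials.json"  # assumed file name and location
    if os.path.exists(key_file):
        print(service_account_email(key_file))
    else:
        print(f"{key_file} not found in the current folder")
```

The printed address should match the principal you granted the BigQuery Admin role to.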

Python implementation

We’re going to write a Python script that we can run straight from the command line, so we’ll need an argument parser. We’ll also keep all private information out of the code by storing it in environment variables.
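A small sketch of the environment-variable approach: reading a secret such as `PSI_API_KEY` and failing early with a clear message when it is missing. The helper `require_env` is hypothetical and the demo value is a dummy, not a real key.

```python
import os


def require_env(name):
    """Return the value of an environment variable or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# For demonstration only: fall back to a dummy value if the variable is unset.
os.environ.setdefault("PSI_API_KEY", "dummy-key-for-demo")
api_key = require_env("PSI_API_KEY")
print(f"Loaded a key of length {len(api_key)}")
```

Failing at startup is usually easier to debug than a rejected API request later in the run.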

First, let’s import the modules we’ll need for this project.

    import os
    import json
    import argparse
    import urllib.request
    from urllib.parse import urlparse

    import pandas as pd
    from dotenv import load_dotenv
    from google.cloud import bigquery

Next, we’ll store the environment variables in constants, so that we read the .env file and the credentials JSON file only once.

    # read variables from the .env file into the environment
    load_dotenv()

    # folder that contains this script and credentials.json
    ROOT = os.path.dirname(__file__)
    os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = os.path.join(ROOT, 'credentials.json')
    PSI_API_KEY = os.getenv('PSI_API_KEY')

Next, we’re going to implement a function that runs a PSI speed test for one type of device. Keep in mind that this project is for demonstration purposes only, so we’ll return only the main Lighthouse 6 metrics.

    def speed_test(url, strategy):
        # URL-encode the query so special characters in the audited URL survive
        query = urllib.parse.urlencode({
            'url': url,
            'strategy': strategy,
            'locale': 'en',
            'key': PSI_API_KEY
        })
        api_url = f'https://www.googleapis.com/pagespeedonline/v5/runPagespeed?{query}'

        with urllib.request.urlopen(api_url) as response:
            data = json.loads(response.read())

        # keep the raw response for inspection
        with open(os.path.join(ROOT, 'data.json'), 'w', encoding='utf-8') as f:
            json.dump(data, f, ensure_ascii=False, indent=4)

        # main Lighthouse metrics
        audits = data['lighthouseResult']['audits']
        vitals = {
            'FCP': audits['first-contentful-paint'],
            'LCP': audits['largest-contentful-paint'],
            'FID': audits['max-potential-fid'],
            'TBT': audits['total-blocking-time'],
            'CLS': audits['cumulative-layout-shift']
        }

        row = {}
        for name, audit in vitals.items():
            # numericValue is in milliseconds for the timing metrics;
            # CLS is unitless, so read the CLS_time column with care
            row[f'{name}_time'] = f'{round(audit["numericValue"] / 1000, 4)}s'
            row[f'{name}_score'] = f'{round(audit["score"] * 100, 2)}%'

        return pd.DataFrame([row])

In the next step, we’ll take that PSI data and store it in a BQ table. We’ll create the dataset if it doesn’t exist; if it does, we’ll simply print that we’re writing data into an existing dataset.

    # add this to the imports at the top of the file
    from google.api_core.exceptions import Conflict

    def write_to_bq(dataset_id, table_id, dataframe):
        client = bigquery.Client(location='US')
        print('Client created using default project: {}'.format(client.project))

        dataset_ref = bigquery.DatasetReference(client.project, dataset_id)
        try:
            client.create_dataset(dataset_ref)
        except Conflict:
            # the API reports a conflict when the dataset already exists
            print('Writing to an existing dataset.')

        table_ref = dataset_ref.table(table_id)
        job = client.load_table_from_dataframe(dataframe, table_ref, location='US')
        job.result()  # wait for the load job to finish
        print('Loaded dataframe to {}'.format(table_ref.path))

And lastly, we need to put everything together.

    if __name__ == '__main__':
        parser = argparse.ArgumentParser(
            description='Audit webpage on PageSpeed Insights and store results in BigQuery dataset'
        )
        parser.add_argument(
            '-u',
            '--url',
            required=True,
            type=str,
            help='Provide URL to audit'
        )
        args = parser.parse_args()

        # name the dataset after the first label of the hostname;
        # hyphens are replaced because dataset IDs cannot contain them
        dataset_id = urlparse(args.url).netloc.split('.')[0].replace('-', '_')

        for strategy in ['mobile', 'desktop']:
            df = speed_test(args.url, strategy)
            table_id = f'{dataset_id}_{strategy}'
            write_to_bq(dataset_id, table_id, df)

Now that the script is ready, we can run it by typing the following command in the command line. You will need to navigate to the project folder first.

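    python main.py -u https://www.example.com

Pass the full URL, including the scheme (https://). Without it, urlparse returns an empty hostname and the dataset name ends up empty.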
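Because the script names the dataset after the first label of the hostname, https://www.example.com is stored in a dataset called www. One possible alternative, sketched below, drops a leading `www.` and replaces every character that is not a letter, digit, or underscore. The helper `dataset_id_from_url` is hypothetical and is not part of the original script.

```python
import re
from urllib.parse import urlparse


def dataset_id_from_url(url):
    """Derive a BigQuery-friendly dataset ID from a URL (hypothetical helper)."""
    host = urlparse(url).hostname
    if not host:
        # urlparse finds no hostname when the scheme is missing
        raise ValueError("URL must include a scheme, e.g. https://example.com")
    if host.startswith("www."):
        host = host[len("www."):]
    label = host.split(".")[0]
    # dataset IDs may contain only letters, digits, and underscores
    return re.sub(r"[^A-Za-z0-9_]", "_", label)


print(dataset_id_from_url("https://www.my-site.com/page"))
print(dataset_id_from_url("https://example.com"))
```

Raising an error for a URL without a scheme surfaces the problem before any API request is sent.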

You can also find the code for the whole project in this GitHub repository.

Conclusion

To conclude, we built a quick demonstration project in Python that connects PageSpeed Insights to Google BigQuery through their APIs. It can be expanded further into tools for more advanced analytics.